Introduction

In 2013, researchers at DeepMind published the paper “Playing Atari with Deep Reinforcement Learning”, in which deep Q-learning was applied to play seven Atari 2006 games and remarkable results were demonstrated. In 2015, their paper “Human-level control through deep reinforcement learning” came out in Nature, in which they applied the same model to 49 different games and achieve superhuman performance in half of them.

Compared to the traditional Q-learning algorithm, deep Q-learning algorithm uses a convolutional neural network to approximate the Q(s,a) function with stochastic gradient descent to update the weights.

Experience Replay

Approximation of Q-values using non-linear functions is not very stable because of the correlated data and non-stationary distributions. To make it converge, one important trick is experience replay ([1]), in which agent’s experiences (s, a, r, s') are stored in a replay memory. When training the network, random minibatches from the replay memory are used instead of the most recent transition. This alleviate the correlation of consecutive training samples, which otherwise might drive the network into a local minimum. One thing to note is that it is necessary to learn off-policy

when learning by experience replay.

Deep Q-learning Algorithm

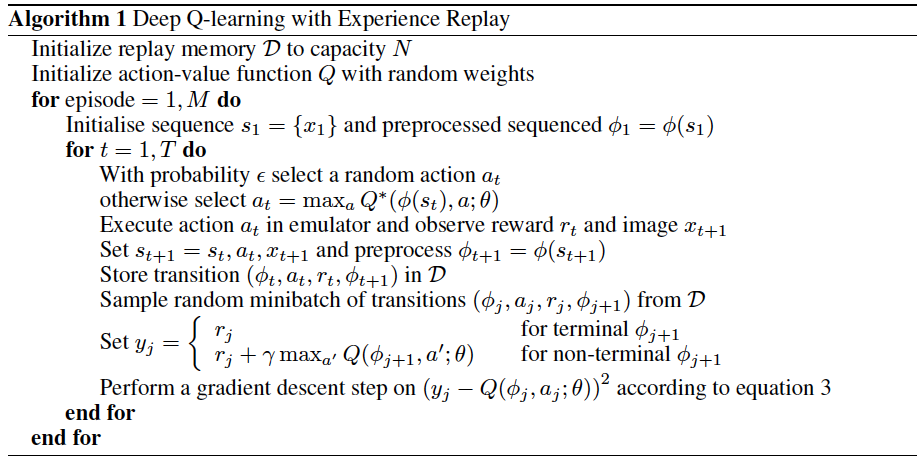

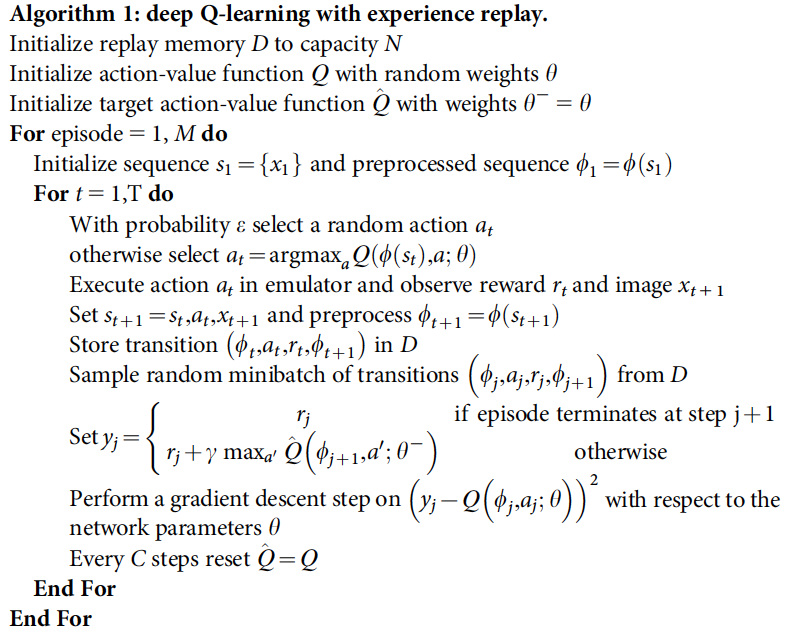

Fig 1 is the deep Q-learning algorithm with experience replay proposed in paper “Playing Atari with Deep Reinforcement Learning”. In their nature paper ([2]), another modification has been made to further improve the stability of the algorithm. Apart from the neural network to approximate the Q(s,a) function, a separate neural network is used to generate the target $y_i$ in the Q-learning update. Fig 2 is the modified algorithm. We can see that, for every C updates, network $Q$ is cloned to obtain the target network $\hat{Q}$ and $\hat{Q}$ is used for generating the targets for the following C updates.

To further improved the stability, they also found it helpful to clip the TD error $r + \gamma max_{a’} Q(s’, a’;\theta_{i}^-) - Q(s,a;\theta_i)$ to be between -1 and 1.

Fig 1: Algorithm 1 from [1]

Fig 2: Algorithm 1 from [2]

References

- Mnih, et al. “Playing atari with deep reinforcement learning.” arXiv preprint arXiv:1312.5602 (2013).

- Mnih, et al. “Human-level control through deep reinforcement learning.” Nature 518.7540 (2015): 529-533.